Vendoroo Newsletter


The Questions Every AI Buyer Has: “How Much Can I Trust It?”

Autonomy is easy. Accountability moves the needle.

“How Much Can I Trust Agentic AI’s Decisions?”


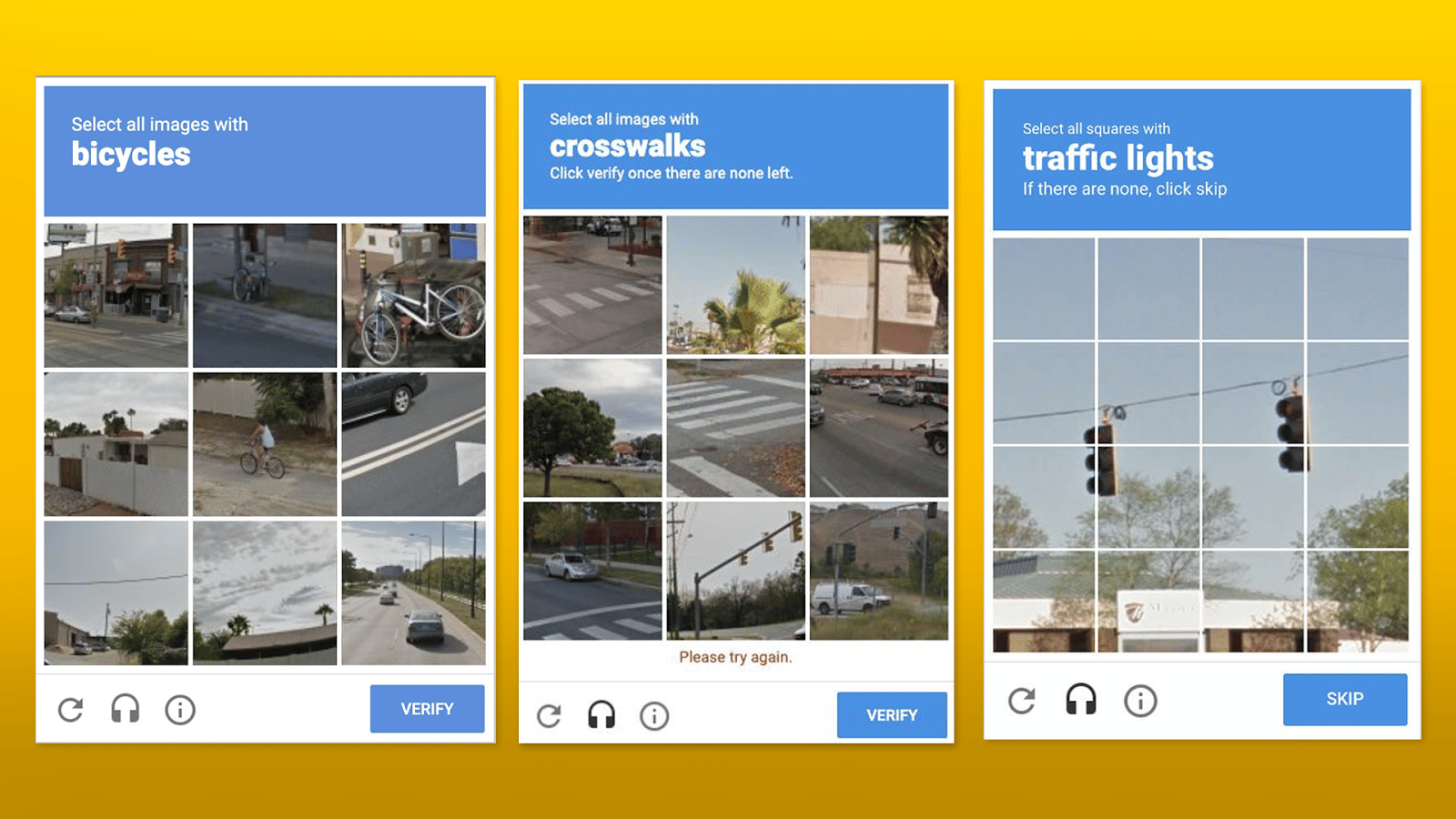

The next time you’re annoyed because a website asks you to click on every picture of a crosswalk or a bicycle to prove you aren’t a robot, pause for a moment and consider what’s actually happening.

What most people don’t realize is that every click does more than pass a security test. It trains an AI.

Even Google, with all its computing power and data, understands something most companies still struggle with: to make AI smarter, human judgment is not optional. reCAPTCHA quietly teaches AI to see the world more accurately, one human decision at a time. There’s an important lesson in that.

The best technology companies are not obsessed with replacing humans. They are obsessed with creating leverage. Technology is one form of leverage. People are another. The best outcomes come from using both intentionally.

In property management, though, we have been having a different conversation.

For years, the focus has been on offloading tasks. The SaaS generation of automation did exactly what it was designed to do: take the simplest, most repeatable workflows, the ones with a clear order of operations and minimal variability, and let software handle them.

That approach sped things up. It reduced noise. It helped teams breathe.

But property management decisions are rarely that clean.

They have inflection points. Context. Exceptions. Moments where judgment matters. Automation could accelerate parts of the workflow, but it could not truly own outcomes.

AI offers a different promise. Not just speed, but judgment inserted at the moments where decisions actually matter. For the first time, responsibility for real outcomes can be offloaded, not just tasks. That is exciting, and it is already happening.

But once AI is making decisions, a new question becomes unavoidable.

When the wrong decision is made, who is responsible for the judgment of your AI teammate?

Answering that question is the next frontier for the most evolved AI companies. And it is exactly where the rest of this conversation begins.

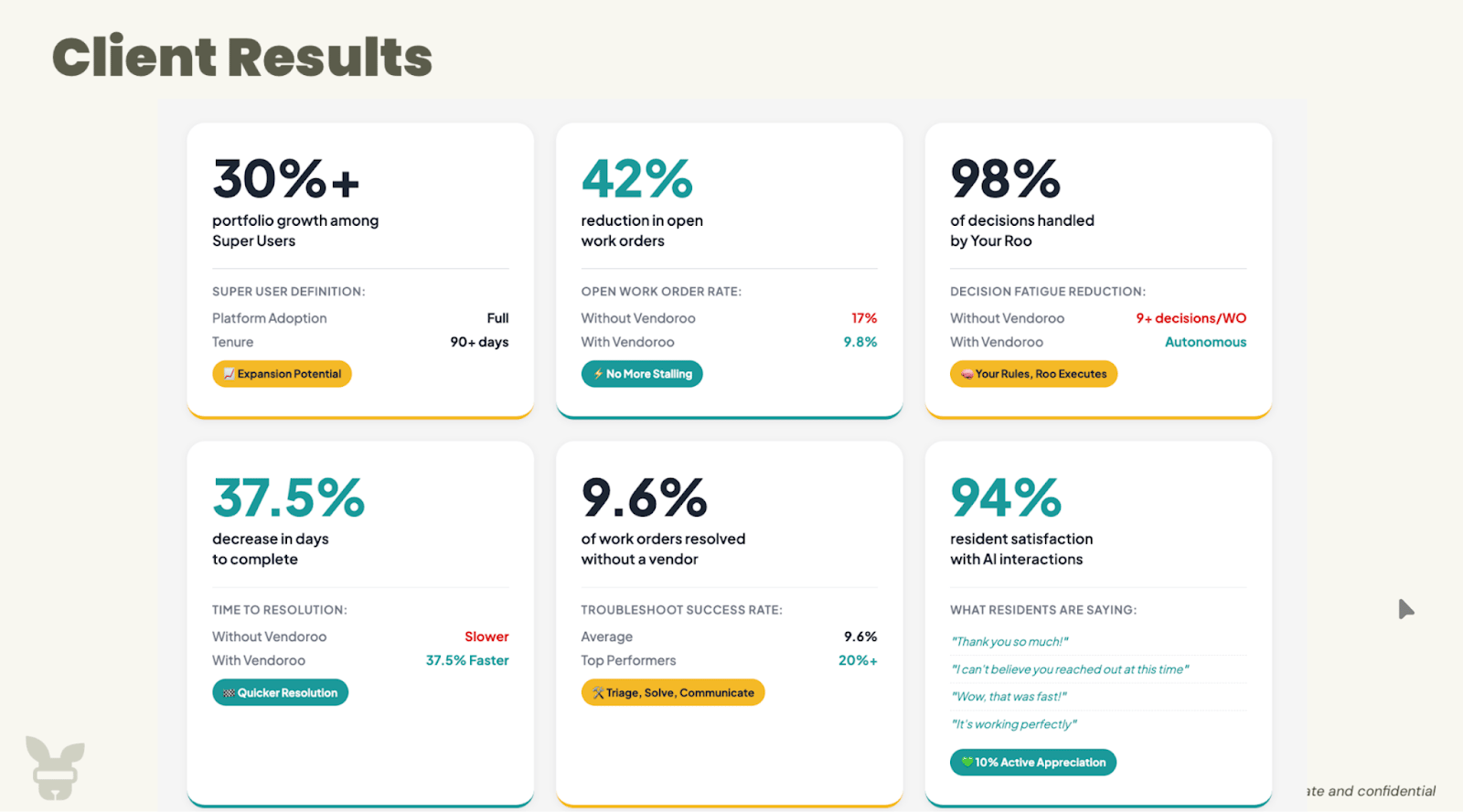

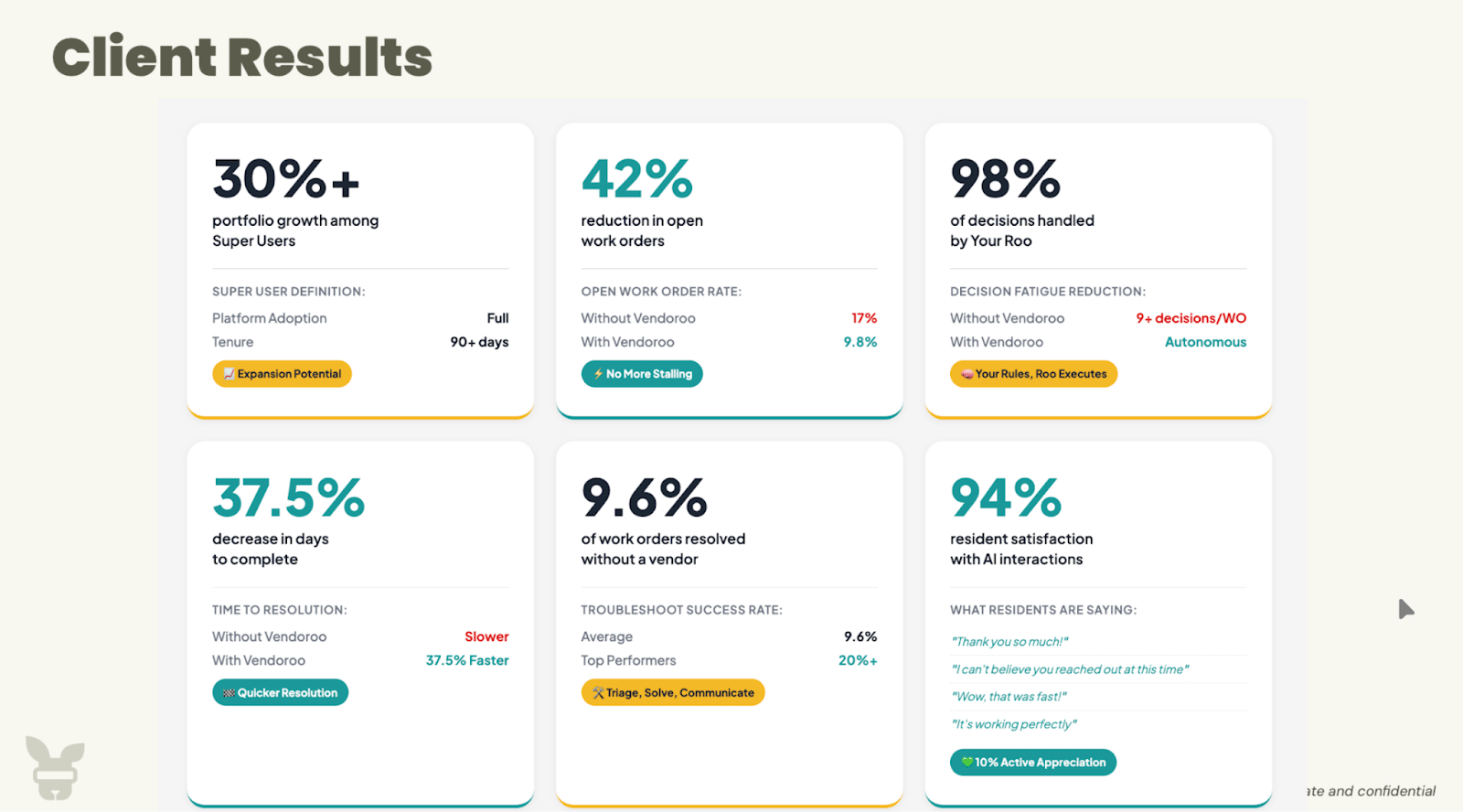

These are the results Vendoroo’s agents are producing for our clients:

If you haven’t checked out Vendoroo recently, it’s time to see our clients’ latest receipts. Book a call with our team to see what an AI teammate could do on your team.

Judgment Is the Job

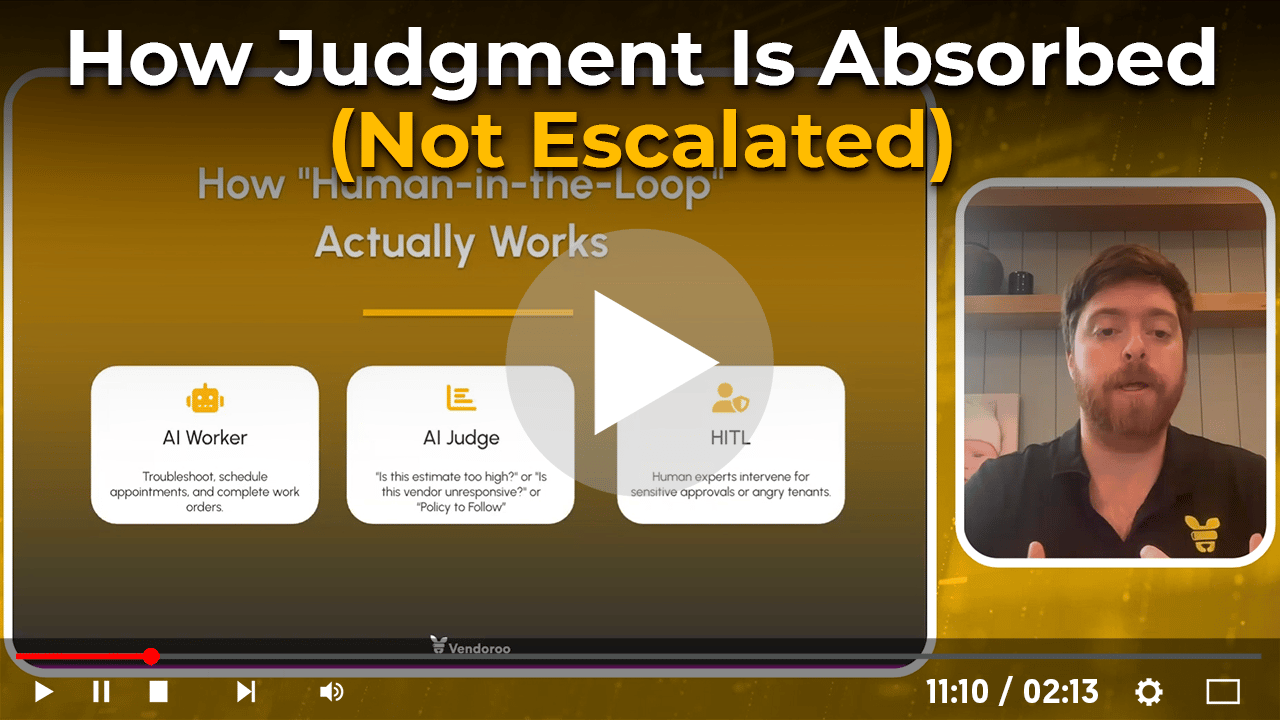

Tyler Nelson recently hosted a webinar explaining how we think about judgment and accountability in AI agents, because once AI starts making decisions, those two things matter more than anything else.

He used a simple analogy to make the point without getting technical.

Imagine teaching a child to cross the street. Automation is reflex. A car is coming, so you stop. Decision-making is judgment.

How fast is the car moving? How far away is it? Is the driver paying attention? Is the light about to change?

A child who never hesitates at an intersection is not impressive. They are dangerous.

And property management is not a 15-mile-per-hour school zone. It is a high-stakes environment filled with emotional tenants, aging infrastructure, owner expectations, and real financial and reputational exposure when things go wrong.

As AI moves beyond simple workflows and starts making decisions in that environment, teaching it when to hesitate and escalate is the only way it can ever be trusted.

There is a reason many platforms avoid this. The easiest way to manage risk is to keep AI shallow: limit it to typical scenarios, optimize for uniform behavior, and claim partial automation. That approach feels safe, but it guarantees the AI will never be more than a superpowered checklist. Uncertainty is not an edge case in property management. It is the operating condition.

An AI agent you would consider an A-hire has to do something most systems are never designed to do. It has to know not just how to act, but when not to. It has to recognize when confidence is high enough to move forward and when judgment is required before a decision is made.

That perspective does not come from a traditional SaaS mindset.

Most AI products are built to execute efficiently, not to own outcomes. We came at this differently. Instead of asking how much work AI could automate, we asked how a system knows when to stop, check itself, and escalate before a decision creates real downstream impact.

When an AI raises its hand, it is not hesitation. It is awareness. And for property managers who live with the consequences of every decision, that distinction changes the questions that matter.

That is where real differentiation begins, and why the conversation about human-in-the-loop becomes unavoidable.

How Judgment Actually Scales

Once you accept that judgment is the job, the next question becomes practical.

How do you scale that judgment without dragging every decision back to a human? How do you protect outcomes without slowing the operation down or reintroducing the very bottlenecks AI was supposed to remove?

This is where most systems break.

They treat judgment as a binary choice. Either the AI decides, or a human does. Either it’s autonomous, or it escalates. That framing makes human involvement feel like a failure mode instead of a design choice.

We didn’t think about it that way.

If judgment is required, then the real challenge is not whether oversight exists, but where it lives and how it operates. To solve that, Vendoroo was built with three deliberate layers of judgment, each handling a different responsibility.

First, there is the AI itself.

This is the agent doing the work. It triages issues, routes vendors, follows client playbooks, applies policies, and moves work orders forward. When confidence is high, it acts. This is where speed and automation live.

Second, there is an AI whose job is not to act, but to evaluate.

This layer monitors decisions as they happen. It assesses confidence, checks for conflicts, and looks for signals that something doesn’t quite fit. When certainty drops below an acceptable threshold, it does not guess. It flags the decision for review.

This is how judgment begins without stopping the system.

Third, and only when needed, there is a human in the loop.

Not as a backstop for everything, and not as a replacement for the AI, but as a deliberate judge of edge cases. When a decision carries enough uncertainty or consequence, a human reviews it, resolves it, and teaches the system how to handle that situation better next time.
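To make the three layers concrete, here is a minimal Python sketch of how such routing could work. It is not Vendoroo’s implementation. The `Decision` type, the 0.85 confidence threshold, the `needs_review` and `handle` functions, and the `lessons` log are all hypothetical, chosen only to illustrate the idea: the acting agent proposes, an evaluator checks confidence and conflicts, and only flagged decisions reach a human, whose resolution is recorded for future learning.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Decision:
    work_order_id: str
    proposed_action: str            # what the acting agent (layer 1) wants to do
    confidence: float               # hypothetical 0.0-1.0 score from the acting agent
    conflicts: list[str] = field(default_factory=list)  # e.g. playbook vs. policy clashes


def needs_review(decision: Decision, threshold: float) -> bool:
    """Layer 2: flag decisions below the confidence threshold or with any conflict."""
    return decision.confidence < threshold or len(decision.conflicts) > 0


def handle(decision: Decision,
           human_review: Callable[[Decision], str],
           lessons: list[tuple[Decision, str]],
           threshold: float = 0.85) -> str:
    """Route one decision through the three layers and return the final action."""
    if not needs_review(decision, threshold):
        return decision.proposed_action      # layer 1 acts on its own
    resolved = human_review(decision)        # layer 3: a human judges the edge case
    lessons.append((decision, resolved))     # recorded so the system can learn from it
    return resolved
```

In this sketch, a high-confidence decision such as `handle(Decision("WO-1", "dispatch plumber", 0.97), reviewer, lessons)` passes straight through, while a low-confidence or conflicting one is handed to `reviewer` and logged. Keeping the evaluator separate from the actor means the threshold can be tuned without changing how work gets done.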

The important part is where that human sits.

The AI does not raise its hand to you. It raises its hand to us.

This structure allows judgment to exist without becoming noise. Decisions still move fast. Outcomes stay protected. And accountability lives upstream, where it belongs.

This is not about adding friction. It is about placing responsibility where it can actually be absorbed.

And once you see judgment as a layered system rather than a single switch, human-in-the-loop stops sounding like a compromise and starts looking like the only way decision-making AI can be trusted at scale.

TL;DR: The Only Way To Truly Trust Your AI

Think back to those reCAPTCHA images.

What’s easy to miss is not the images themselves, but the principle behind them.

Even the most advanced AI systems in the world are not trusted to operate without judgment at moments where accuracy truly matters. When certainty drops, they are designed to pause, ask for help, and learn from a human decision. That is not a weakness. It is how intelligence compounds safely.

We have been comfortable with this model for years, as long as AI was learning.

What’s changed is that AI is no longer just learning. It is deciding.

And decision-making is exactly where that same principle becomes non-negotiable.

In property management, decisions carry real consequences. Financial exposure. Owner trust. Resident relationships. Pretending those decisions are always certain does not make the system smarter. It makes risk invisible.

The most advanced AI systems will not be the ones that never hesitate. They will be the ones that know when hesitation is required: systems that recognize uncertainty, apply judgment intentionally, and absorb accountability before outcomes go sideways.

That is the difference between automation and a teammate, and it should change how you evaluate every AI system you consider.

Pablo Gonzalez

Chief Evangelist at Vendoroo

P.S. Don’t forget what you saw earlier! These are the results Vendoroo’s agents are producing for our clients:

If you haven’t checked out Vendoroo recently, it’s time to see our clients’ latest receipts. Book a call with our team to see what an AI teammate could do on your team.